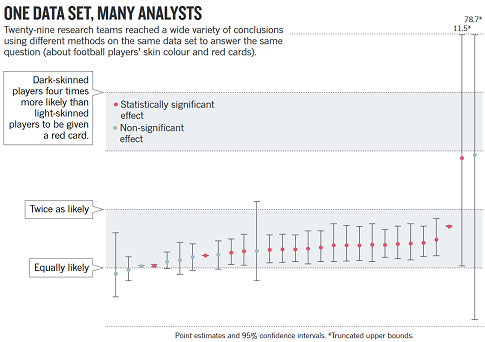

29 groups analyzed the same data set, apparently in many different ways

Subscribe to Decision Science News by Email (one email per week, easy unsubscribe)

CROWDSOURCING RESEARCH

We have been meaning to post for quite some time about this very interesting piece in *Nature* entitled “Crowdsourced research: Many hands make tight work.” In it, the authors describe how one of their findings didn’t hold up when Uri Simonsohn re-analyzed it. Instead of digging in their heels, they admitted Uri was right and realized there’s wisdom in having other people take a run at a data set, since others may find better ways to analyze it.

They wondered whether, in wisdom-of-the-crowds fashion, aggregating multiple independent analyses might lead to better conclusions. (We at Decision Science News would expect the effect to be even stronger with a selected crowd of analysts.)

The authors recruited 29 groups of researchers to analyze a single data set concerning soccer penalties (red cards) and the race of players. The figure at the top of this post shows that the groups arrived at many different estimates, with confidence intervals of varying widths, yet about 70% of teams found a significant, positive relationship.
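For readers who want to play with the idea, the two crowd-level summaries mentioned above (a single aggregate estimate and the share of teams reporting a significant positive effect) can be sketched in a few lines of Python. This is a minimal sketch, not the authors’ method: it assumes each team reports an odds ratio with a 95% confidence interval, uses the median across teams as a simple wisdom-of-the-crowds aggregate, and counts a team as “significant and positive” when its whole interval lies above 1. The `TeamEstimate` type, the function names, and the numbers below are all hypothetical.

```python
from statistics import median
from typing import NamedTuple, Sequence


class TeamEstimate(NamedTuple):
    """Hypothetical record: one team's odds ratio and its 95% confidence interval."""
    odds_ratio: float
    ci_low: float
    ci_high: float


def crowd_median(estimates: Sequence[TeamEstimate]) -> float:
    """Median odds ratio across teams: a simple, outlier-resistant crowd aggregate."""
    return median(e.odds_ratio for e in estimates)


def share_significant_positive(estimates: Sequence[TeamEstimate]) -> float:
    """Fraction of teams whose entire 95% CI lies above 1 (i.e., excludes 'no effect')."""
    return sum(e.ci_low > 1.0 for e in estimates) / len(estimates)


# Made-up numbers for illustration only; these are NOT the study's results.
teams = [
    TeamEstimate(1.31, 1.10, 1.56),
    TeamEstimate(1.12, 0.95, 1.32),
    TeamEstimate(1.45, 1.20, 1.75),
    TeamEstimate(0.98, 0.80, 1.20),
    TeamEstimate(1.28, 1.05, 1.56),
]

print(crowd_median(teams))                # 1.28
print(share_significant_positive(teams))  # 0.6
```

We picked the median rather than the mean so that one unusual analysis can’t drag the aggregate far. Note also that all the teams worked from the same data, so their estimates aren’t independent draws, which is one reason to be cautious about pooling them as if they came from separate studies.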

It’s fascinating stuff. The comment is here and the paper by the 29 groups of researchers is here.