How many digits into pi do you need to go to find your birthday?

Subscribe to Decision Science News by Email (one email per week, easy unsubscribe)

FIND YOUR BIRTHDAY IN PI, IN THREE DIFFERENT FORMATS

It was Pi Day (March 14, like 3/14, like 3.14, get it?) recently, and Time Magazine made a fun interactive app that lets you find your birthday in the digits of pi.

However:

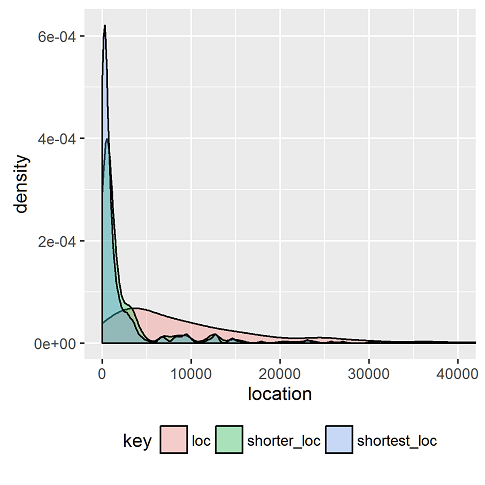

1) They only used one birthday format, in which July 4 is written as 704. But there are other ways to write July 4, such as 0704 or 74, and those must be investigated, right?

2) Our friend Dave Pennock said he wanted to see the locations of every possible birthday inside pi.

So here they are. Enjoy! The R code is below if you are interested. The plot and the data wrangling use Hadley Wickham’s tidyverse tools.

And what the heck, have a spreadsheet of fun (i.e., a spreadsheet with all the birthdays and their locations). And here are 100,000 digits of pi, too.
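Point 1 can be made concrete with a minimal base-R sketch. It generates the three formats for a date and looks each one up in the first 100 decimal places of pi. The digits are hard-coded so the example runs without pi.txt. `birthday_formats()` and `first_position()` are hypothetical helpers written for this illustration and are not part of the post's script.

```r
# First 100 decimal places of pi, with the leading 3 kept and the decimal point
# dropped, so position 1 is the "3" (the same convention as the table below)
pi_digits <- paste0(
  "3",
  "1415926535", "8979323846", "2643383279", "5028841971", "6939937510",
  "5820974944", "5923078164", "0628620899", "8628034825", "3421170679"
)

# Hypothetical helper: the three ways of writing a month/day pair used in this post
birthday_formats <- function(month, day) {
  c(full     = sprintf("%02d%02d", month, day),  # July 4 -> "0704"
    shorter  = sprintf("%d%02d", month, day),    # July 4 -> "704"
    shortest = sprintf("%d%d", month, day))      # July 4 -> "74"
}

# Hypothetical helper: 1-based position where `pattern` first starts, or NA if absent
first_position <- function(pattern, digits) {
  pos <- regexpr(pattern, digits, fixed = TRUE)  # literal match, -1 when not found
  if (pos == -1) NA_integer_ else as.integer(pos)
}

sapply(birthday_formats(7, 4), first_position, digits = pi_digits)
# full = NA, shorter = NA, shortest = 57
```

Only the two-digit form 74 turns up this early, at position 57. This agrees with the July 4 row of the table. The other two forms need thousands of digits (0704 first appears at 9882 and 704 at 2670), which is why the full 100,000-digit file is useful.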

ADDENDUM

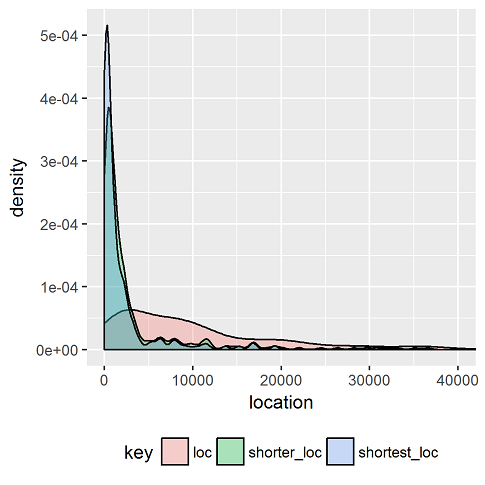

Just for completeness, let’s redo that plot using 100,000 random digits instead of the digits of pi.

Yep, pretty similar!
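There is a reason the two plots look alike. If digits are independent and uniform, a k-digit string first shows up at a position on the order of 10^k. Pi is widely believed, though not proven, to behave this way (that is, to be "normal"). This also explains why the four-, three-, and two-digit formats sit at such different scales. The sketch below uses the standard overlap formula for the expected waiting time of a pattern. It assumes i.i.d. uniform digits, which is an idealization rather than a fact about pi. It then checks one case by simulation. `expected_wait()` and `expected_start()` are hypothetical helpers.

```r
# Expected number of digits read until `pattern` first *ends*, assuming every
# digit is independent and uniform on 0-9. Overlap formula: add base^k for each
# k where the first k characters equal the last k (k = full length always counts).
expected_wait <- function(pattern, base = 10) {
  n <- nchar(pattern)
  total <- 0
  for (k in seq_len(n)) {
    if (substr(pattern, 1, k) == substr(pattern, n - k + 1, n)) {
      total <- total + base^k
    }
  }
  total
}

# Convert to the table's convention: the 1-based position where the match *starts*
expected_start <- function(pattern) expected_wait(pattern) - nchar(pattern) + 1

sapply(c("0704", "704", "74", "1111"), expected_start)
# 0704 = 9997, 704 = 998, 74 = 99, 1111 = 11107 (self-overlap pushes it later)

# Monte Carlo check for "74": average first position over 2,000 random strings
set.seed(1)
sim <- replicate(2000, {
  digits <- paste(sample(0:9, 2000, replace = TRUE), collapse = "")
  as.integer(regexpr("74", digits, fixed = TRUE))  # -1 if absent (vanishingly rare here)
})
mean(sim)  # should come out close to expected_start("74") = 99
```

These are averages of long-tailed distributions. Individual birthdays scatter widely around them, which is what the density plots show.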

First location of each birthday in the digits of pi, in all three formats. A location is the position where the digit string starts, counting the leading 3 as position 1:

| Birthday | Location | Shorter form | Location | Shortest form | Location |
|---|---:|---|---:|---|---:|
| 01-01 | 2563 | 1-01 | 853 | 1-1 | 95 |
| 01-02 | 6599 | 1-02 | 164 | 1-2 | 149 |
| 01-03 | 25991 | 1-03 | 3487 | 1-3 | 111 |
| 01-04 | 1219 | 1-04 | 270 | 1-4 | 2 |
| 01-05 | 3982 | 1-05 | 50 | 1-5 | 4 |
| 01-06 | 1011 | 1-06 | 1012 | 1-6 | 41 |
| 01-07 | 23236 | 1-07 | 1488 | 1-7 | 96 |
| 01-08 | 16216 | 1-08 | 2535 | 1-8 | 425 |
| 01-09 | 4555 | 1-09 | 207 | 1-9 | 38 |
| 01-10 | 3253 | 1-10 | 175 | 1-10 | 175 |
| 01-11 | 19627 | 1-11 | 154 | 1-11 | 154 |
| 01-12 | 4449 | 1-12 | 710 | 1-12 | 710 |
| 01-13 | 362 | 1-13 | 363 | 1-13 | 363 |
| 01-14 | 4678 | 1-14 | 2725 | 1-14 | 2725 |
| 01-15 | 27576 | 1-15 | 922 | 1-15 | 922 |
| 01-16 | 5776 | 1-16 | 396 | 1-16 | 396 |
| 01-17 | 6290 | 1-17 | 95 | 1-17 | 95 |
| 01-18 | 7721 | 1-18 | 446 | 1-18 | 446 |
| 01-19 | 494 | 1-19 | 495 | 1-19 | 495 |
| 01-20 | 43172 | 1-20 | 244 | 1-20 | 244 |
| 01-21 | 6305 | 1-21 | 711 | 1-21 | 711 |
| 01-22 | 660 | 1-22 | 484 | 1-22 | 484 |
| 01-23 | 27847 | 1-23 | 1925 | 1-23 | 1925 |
| 01-24 | 8619 | 1-24 | 1081 | 1-24 | 1081 |
| 01-25 | 15458 | 1-25 | 1351 | 1-25 | 1351 |
| 01-26 | 4372 | 1-26 | 2014 | 1-26 | 2014 |
| 01-27 | 9693 | 1-27 | 298 | 1-27 | 298 |
| 01-28 | 1199 | 1-28 | 149 | 1-28 | 149 |
| 01-29 | 2341 | 1-29 | 500 | 1-29 | 500 |
| 01-30 | 5748 | 1-30 | 745 | 1-30 | 745 |
| 01-31 | 5742 | 1-31 | 1097 | 1-31 | 1097 |
| 02-01 | 9803 | 2-01 | 245 | 2-1 | 94 |
| 02-02 | 7287 | 2-02 | 1514 | 2-2 | 136 |
| 02-03 | 3831 | 2-03 | 1051 | 2-3 | 17 |
| 02-04 | 28185 | 2-04 | 375 | 2-4 | 293 |
| 02-05 | 12631 | 2-05 | 1327 | 2-5 | 90 |
| 02-06 | 9809 | 2-06 | 885 | 2-6 | 7 |
| 02-07 | 14839 | 2-07 | 2374 | 2-7 | 29 |
| 02-08 | 6141 | 2-08 | 77 | 2-8 | 34 |
| 02-09 | 24361 | 2-09 | 54 | 2-9 | 187 |
| 02-10 | 9918 | 2-10 | 1318 | 2-10 | 1318 |
| 02-11 | 4353 | 2-11 | 94 | 2-11 | 94 |
| 02-12 | 30474 | 2-12 | 712 | 2-12 | 712 |
| 02-13 | 524 | 2-13 | 281 | 2-13 | 281 |
| 02-14 | 10340 | 2-14 | 103 | 2-14 | 103 |
| 02-15 | 24666 | 2-15 | 3099 | 2-15 | 3099 |
| 02-16 | 4384 | 2-16 | 992 | 2-16 | 992 |
| 02-17 | 546 | 2-17 | 547 | 2-17 | 547 |
| 02-18 | 2866 | 2-18 | 424 | 2-18 | 424 |
| 02-19 | 716 | 2-19 | 717 | 2-19 | 717 |
| 02-20 | 15715 | 2-20 | 1911 | 2-20 | 1911 |
| 02-21 | 17954 | 2-21 | 1737 | 2-21 | 1737 |
| 02-22 | 1889 | 2-22 | 1736 | 2-22 | 1736 |
| 02-23 | 25113 | 2-23 | 136 | 2-23 | 136 |
| 02-24 | 12782 | 2-24 | 536 | 2-24 | 536 |
| 02-25 | 3019 | 2-25 | 1071 | 2-25 | 1071 |
| 02-26 | 6742 | 2-26 | 965 | 2-26 | 965 |
| 02-27 | 18581 | 2-27 | 485 | 2-27 | 485 |
| 02-28 | 4768 | 2-28 | 2528 | 2-28 | 2528 |
| 02-29 | 6707 | 2-29 | 186 | 2-29 | 186 |
| 03-01 | 1045 | 3-01 | 493 | 3-1 | 1 |
| 03-02 | 1439 | 3-02 | 818 | 3-2 | 16 |
| 03-03 | 15339 | 3-03 | 195 | 3-3 | 25 |
| 03-04 | 4460 | 3-04 | 4461 | 3-4 | 87 |
| 03-05 | 29985 | 3-05 | 366 | 3-5 | 10 |
| 03-06 | 3102 | 3-06 | 116 | 3-6 | 286 |
| 03-07 | 3813 | 3-07 | 65 | 3-7 | 47 |
| 03-08 | 35207 | 3-08 | 520 | 3-8 | 18 |
| 03-09 | 5584 | 3-09 | 421 | 3-9 | 44 |
| 03-10 | 7692 | 3-10 | 442 | 3-10 | 442 |
| 03-11 | 11254 | 3-11 | 846 | 3-11 | 846 |
| 03-12 | 11647 | 3-12 | 2632 | 3-12 | 2632 |
| 03-13 | 858 | 3-13 | 859 | 3-13 | 859 |
| 03-14 | 3496 | 3-14 | 1 | 3-14 | 1 |
| 03-15 | 8285 | 3-15 | 314 | 3-15 | 314 |
| 03-16 | 13008 | 3-16 | 238 | 3-16 | 238 |
| 03-17 | 4778 | 3-17 | 138 | 3-17 | 138 |
| 03-18 | 7291 | 3-18 | 797 | 3-18 | 797 |
| 03-19 | 4530 | 3-19 | 1166 | 3-19 | 1166 |
| 03-20 | 3767 | 3-20 | 600 | 3-20 | 600 |
| 03-21 | 18810 | 3-21 | 960 | 3-21 | 960 |
| 03-22 | 12744 | 3-22 | 3434 | 3-22 | 3434 |
| 03-23 | 8948 | 3-23 | 16 | 3-23 | 16 |
| 03-24 | 16475 | 3-24 | 2495 | 3-24 | 2495 |
| 03-25 | 12524 | 3-25 | 3147 | 3-25 | 3147 |
| 03-26 | 7634 | 3-26 | 275 | 3-26 | 275 |
| 03-27 | 9208 | 3-27 | 28 | 3-27 | 28 |
| 03-28 | 24341 | 3-28 | 112 | 3-28 | 112 |
| 03-29 | 16096 | 3-29 | 3333 | 3-29 | 3333 |
| 03-30 | 2568 | 3-30 | 365 | 3-30 | 365 |
| 03-31 | 13852 | 3-31 | 1128 | 3-31 | 1128 |
| 04-01 | 19625 | 4-01 | 1198 | 4-1 | 3 |
| 04-02 | 17679 | 4-02 | 1368 | 4-2 | 93 |
| 04-03 | 4776 | 4-03 | 724 | 4-3 | 24 |
| 04-04 | 1272 | 4-04 | 1273 | 4-4 | 60 |
| 04-05 | 10748 | 4-05 | 596 | 4-5 | 61 |
| 04-06 | 39577 | 4-06 | 71 | 4-6 | 20 |
| 04-07 | 4258 | 4-07 | 2008 | 4-7 | 120 |
| 04-08 | 5602 | 4-08 | 146 | 4-8 | 88 |
| 04-09 | 33757 | 4-09 | 340 | 4-9 | 58 |
| 04-10 | 11495 | 4-10 | 163 | 4-10 | 163 |
| 04-11 | 9655 | 4-11 | 2497 | 4-11 | 2497 |
| 04-12 | 20103 | 4-12 | 297 | 4-12 | 297 |
| 04-13 | 6460 | 4-13 | 1076 | 4-13 | 1076 |
| 04-14 | 3756 | 4-14 | 385 | 4-14 | 385 |
| 04-15 | 7735 | 4-15 | 3 | 4-15 | 3 |
| 04-16 | 10206 | 4-16 | 1709 | 4-16 | 1709 |
| 04-17 | 15509 | 4-17 | 1419 | 4-17 | 1419 |
| 04-18 | 7847 | 4-18 | 728 | 4-18 | 728 |
| 04-19 | 4790 | 4-19 | 37 | 4-19 | 37 |
| 04-20 | 3286 | 4-20 | 702 | 4-20 | 702 |
| 04-21 | 16028 | 4-21 | 93 | 4-21 | 93 |
| 04-22 | 4796 | 4-22 | 1840 | 4-22 | 1840 |
| 04-23 | 8220 | 4-23 | 2995 | 4-23 | 2995 |
| 04-24 | 1878 | 4-24 | 1110 | 4-24 | 1110 |
| 04-25 | 5716 | 4-25 | 822 | 4-25 | 822 |
| 04-26 | 1392 | 4-26 | 1393 | 4-26 | 1393 |
| 04-27 | 36946 | 4-27 | 630 | 4-27 | 630 |
| 04-28 | 910 | 4-28 | 203 | 4-28 | 203 |
| 04-29 | 4514 | 4-29 | 1230 | 4-29 | 1230 |
| 04-30 | 4619 | 4-30 | 519 | 4-30 | 519 |
| 05-01 | 21816 | 5-01 | 1344 | 5-1 | 49 |
| 05-02 | 25156 | 5-02 | 32 | 5-2 | 173 |
| 05-03 | 19743 | 5-03 | 838 | 5-3 | 9 |
| 05-04 | 9475 | 5-04 | 1921 | 5-4 | 192 |
| 05-05 | 36575 | 5-05 | 132 | 5-5 | 131 |
| 05-06 | 2094 | 5-06 | 1116 | 5-6 | 211 |
| 05-07 | 683 | 5-07 | 684 | 5-7 | 405 |
| 05-08 | 14658 | 5-08 | 1122 | 5-8 | 11 |
| 05-09 | 17171 | 5-09 | 1147 | 5-9 | 5 |
| 05-10 | 10274 | 5-10 | 49 | 5-10 | 49 |
| 05-11 | 444 | 5-11 | 395 | 5-11 | 395 |
| 05-12 | 2294 | 5-12 | 1843 | 5-12 | 1843 |
| 05-13 | 597 | 5-13 | 110 | 5-13 | 110 |
| 05-14 | 42236 | 5-14 | 2118 | 5-14 | 2118 |
| 05-15 | 10458 | 5-15 | 1100 | 5-15 | 1100 |
| 05-16 | 5367 | 5-16 | 3516 | 5-16 | 3516 |
| 05-17 | 24365 | 5-17 | 2109 | 5-17 | 2109 |
| 05-18 | 751 | 5-18 | 471 | 5-18 | 471 |
| 05-19 | 17096 | 5-19 | 390 | 5-19 | 390 |
| 05-20 | 20247 | 5-20 | 326 | 5-20 | 326 |
| 05-21 | 19553 | 5-21 | 173 | 5-21 | 173 |
| 05-22 | 2041 | 5-22 | 535 | 5-22 | 535 |
| 05-23 | 4317 | 5-23 | 579 | 5-23 | 579 |
| 05-24 | 15635 | 5-24 | 1615 | 5-24 | 1615 |
| 05-25 | 3233 | 5-25 | 1006 | 5-25 | 1006 |
| 05-26 | 3984 | 5-26 | 613 | 5-26 | 613 |
| 05-27 | 7300 | 5-27 | 241 | 5-27 | 241 |
| 05-28 | 18917 | 5-28 | 867 | 5-28 | 867 |
| 05-29 | 6373 | 5-29 | 1059 | 5-29 | 1059 |
| 05-30 | 367 | 5-30 | 368 | 5-30 | 368 |
| 05-31 | 26607 | 5-31 | 2871 | 5-31 | 2871 |
| 06-01 | 2400 | 6-01 | 1218 | 6-1 | 220 |
| 06-02 | 14854 | 6-02 | 291 | 6-2 | 21 |
| 06-03 | 9019 | 6-03 | 264 | 6-3 | 313 |
| 06-04 | 1172 | 6-04 | 1173 | 6-4 | 23 |
| 06-05 | 5681 | 6-05 | 750 | 6-5 | 8 |
| 06-06 | 13163 | 6-06 | 311 | 6-6 | 118 |
| 06-07 | 10861 | 6-07 | 287 | 6-7 | 99 |
| 06-08 | 13912 | 6-08 | 618 | 6-8 | 606 |
| 06-09 | 3376 | 6-09 | 128 | 6-9 | 42 |
| 06-10 | 13704 | 6-10 | 269 | 6-10 | 269 |
| 06-11 | 7448 | 6-11 | 427 | 6-11 | 427 |
| 06-12 | 11585 | 6-12 | 220 | 6-12 | 220 |
| 06-13 | 7490 | 6-13 | 971 | 6-13 | 971 |
| 06-14 | 2887 | 6-14 | 1612 | 6-14 | 1612 |
| 06-15 | 25066 | 6-15 | 1030 | 6-15 | 1030 |
| 06-16 | 6017 | 6-16 | 1206 | 6-16 | 1206 |
| 06-17 | 886 | 6-17 | 887 | 6-17 | 887 |
| 06-18 | 14424 | 6-18 | 1444 | 6-18 | 1444 |
| 06-19 | 6351 | 6-19 | 843 | 6-19 | 843 |
| 06-20 | 14967 | 6-20 | 76 | 6-20 | 76 |
| 06-21 | 2819 | 6-21 | 2820 | 6-21 | 2820 |
| 06-22 | 30206 | 6-22 | 185 | 6-22 | 185 |
| 06-23 | 51529 | 6-23 | 456 | 6-23 | 456 |
| 06-24 | 5491 | 6-24 | 510 | 6-24 | 510 |
| 06-25 | 5971 | 6-25 | 1569 | 6-25 | 1569 |
| 06-26 | 16707 | 6-26 | 21 | 6-26 | 21 |
| 06-27 | 5424 | 6-27 | 462 | 6-27 | 462 |
| 06-28 | 72 | 6-28 | 73 | 6-28 | 73 |
| 06-29 | 11928 | 6-29 | 571 | 6-29 | 571 |
| 06-30 | 10902 | 6-30 | 1900 | 6-30 | 1900 |
| 07-01 | 10640 | 7-01 | 167 | 7-1 | 40 |
| 07-02 | 544 | 7-02 | 545 | 7-2 | 140 |
| 07-03 | 10108 | 7-03 | 408 | 7-3 | 300 |
| 07-04 | 9882 | 7-04 | 2670 | 7-4 | 57 |
| 07-05 | 9233 | 7-05 | 561 | 7-5 | 48 |
| 07-06 | 5134 | 7-06 | 97 | 7-6 | 570 |
| 07-07 | 3815 | 7-07 | 755 | 7-7 | 560 |
| 07-08 | 8111 | 7-08 | 3061 | 7-8 | 67 |
| 07-09 | 3641 | 7-09 | 121 | 7-9 | 14 |
| 07-10 | 3169 | 7-10 | 681 | 7-10 | 681 |
| 07-11 | 4250 | 7-11 | 1185 | 7-11 | 1185 |
| 07-12 | 7695 | 7-12 | 243 | 7-12 | 243 |
| 07-13 | 36438 | 7-13 | 627 | 7-13 | 627 |
| 07-14 | 9546 | 7-14 | 610 | 7-14 | 610 |
| 07-15 | 5668 | 7-15 | 344 | 7-15 | 344 |
| 07-16 | 10242 | 7-16 | 40 | 7-16 | 40 |
| 07-17 | 15743 | 7-17 | 568 | 7-17 | 568 |
| 07-18 | 2988 | 7-18 | 2776 | 7-18 | 2776 |
| 07-19 | 8664 | 7-19 | 541 | 7-19 | 541 |
| 07-20 | 44221 | 7-20 | 1009 | 7-20 | 1009 |
| 07-21 | 756 | 7-21 | 650 | 7-21 | 650 |
| 07-22 | 2375 | 7-22 | 2140 | 7-22 | 2140 |
| 07-23 | 3300 | 7-23 | 1632 | 7-23 | 1632 |
| 07-24 | 8811 | 7-24 | 302 | 7-24 | 302 |
| 07-25 | 16244 | 7-25 | 140 | 7-25 | 140 |
| 07-26 | 288 | 7-26 | 289 | 7-26 | 289 |
| 07-27 | 12328 | 7-27 | 406 | 7-27 | 406 |
| 07-28 | 4879 | 7-28 | 1584 | 7-28 | 1584 |
| 07-29 | 4078 | 7-29 | 771 | 7-29 | 771 |
| 07-30 | 6892 | 7-30 | 898 | 7-30 | 898 |
| 07-31 | 2808 | 7-31 | 785 | 7-31 | 785 |
| 08-01 | 9508 | 8-01 | 3418 | 8-1 | 68 |
| 08-02 | 18639 | 8-02 | 1993 | 8-2 | 53 |
| 08-03 | 8283 | 8-03 | 85 | 8-3 | 27 |
| 08-04 | 1284 | 8-04 | 775 | 8-4 | 19 |
| 08-05 | 2292 | 8-05 | 957 | 8-5 | 172 |
| 08-06 | 9289 | 8-06 | 968 | 8-6 | 75 |
| 08-07 | 15787 | 8-07 | 451 | 8-7 | 306 |
| 08-08 | 4545 | 8-08 | 106 | 8-8 | 35 |
| 08-09 | 3009 | 8-09 | 1003 | 8-9 | 12 |
| 08-10 | 3207 | 8-10 | 206 | 8-10 | 206 |
| 08-11 | 10884 | 8-11 | 153 | 8-11 | 153 |
| 08-12 | 147 | 8-12 | 148 | 8-12 | 148 |
| 08-13 | 10981 | 8-13 | 734 | 8-13 | 734 |
| 08-14 | 4274 | 8-14 | 882 | 8-14 | 882 |
| 08-15 | 25257 | 8-15 | 324 | 8-15 | 324 |
| 08-16 | 1601 | 8-16 | 68 | 8-16 | 68 |
| 08-17 | 4857 | 8-17 | 319 | 8-17 | 319 |
| 08-18 | 30399 | 8-18 | 490 | 8-18 | 490 |
| 08-19 | 12786 | 8-19 | 198 | 8-19 | 198 |
| 08-20 | 4787 | 8-20 | 53 | 8-20 | 53 |
| 08-21 | 12923 | 8-21 | 102 | 8-21 | 102 |
| 08-22 | 5149 | 8-22 | 135 | 8-22 | 135 |
| 08-23 | 11957 | 8-23 | 114 | 8-23 | 114 |
| 08-24 | 10358 | 8-24 | 1196 | 8-24 | 1196 |
| 08-25 | 828 | 8-25 | 89 | 8-25 | 89 |
| 08-26 | 4294 | 8-26 | 2059 | 8-26 | 2059 |
| 08-27 | 619 | 8-27 | 620 | 8-27 | 620 |
| 08-28 | 24614 | 8-28 | 2381 | 8-28 | 2381 |
| 08-29 | 1123 | 8-29 | 335 | 8-29 | 335 |
| 08-30 | 816 | 8-30 | 492 | 8-30 | 492 |
| 08-31 | 25355 | 8-31 | 237 | 8-31 | 237 |
| 09-01 | 658 | 9-01 | 659 | 9-1 | 250 |
| 09-02 | 2074 | 9-02 | 715 | 9-2 | 6 |
| 09-03 | 6335 | 9-03 | 357 | 9-3 | 15 |
| 09-04 | 8107 | 9-04 | 909 | 9-4 | 59 |
| 09-05 | 23683 | 9-05 | 3191 | 9-5 | 31 |
| 09-06 | 8297 | 9-06 | 1294 | 9-6 | 181 |
| 09-07 | 2986 | 9-07 | 543 | 9-7 | 13 |
| 09-08 | 7828 | 9-08 | 815 | 9-8 | 81 |
| 09-09 | 12077 | 9-09 | 248 | 9-9 | 45 |
| 09-10 | 17009 | 9-10 | 2874 | 9-10 | 2874 |
| 09-11 | 6125 | 9-11 | 1534 | 9-11 | 1534 |
| 09-12 | 29379 | 9-12 | 483 | 9-12 | 483 |
| 09-13 | 17515 | 9-13 | 1096 | 9-13 | 1096 |
| 09-14 | 249 | 9-14 | 250 | 9-14 | 250 |
| 09-15 | 3413 | 9-15 | 1315 | 9-15 | 1315 |
| 09-16 | 5930 | 9-16 | 3370 | 9-16 | 3370 |
| 09-17 | 341 | 9-17 | 342 | 9-17 | 342 |
| 09-18 | 4731 | 9-18 | 2220 | 9-18 | 2220 |
| 09-19 | 2127 | 9-19 | 417 | 9-19 | 417 |
| 09-20 | 328 | 9-20 | 329 | 9-20 | 329 |
| 09-21 | 422 | 9-21 | 423 | 9-21 | 423 |
| 09-22 | 7963 | 9-22 | 687 | 9-22 | 687 |
| 09-23 | 23216 | 9-23 | 63 | 9-23 | 63 |
| 09-24 | 5122 | 9-24 | 1430 | 9-24 | 1430 |
| 09-25 | 4008 | 9-25 | 337 | 9-25 | 337 |
| 09-26 | 5380 | 9-26 | 6 | 9-26 | 6 |
| 09-27 | 1177 | 9-27 | 977 | 9-27 | 977 |
| 09-28 | 5768 | 9-28 | 2192 | 9-28 | 2192 |
| 09-29 | 7785 | 9-29 | 1854 | 9-29 | 1854 |
| 09-30 | 1494 | 9-30 | 194 | 9-30 | 194 |
| 10-01 | 15762 | 10-01 | 15762 | 10-1 | 853 |
| 10-02 | 6740 | 10-02 | 6740 | 10-2 | 164 |
| 10-03 | 12292 | 10-03 | 12292 | 10-3 | 3487 |
| 10-04 | 3849 | 10-04 | 3849 | 10-4 | 270 |
| 10-05 | 2881 | 10-05 | 2881 | 10-5 | 50 |
| 10-06 | 3481 | 10-06 | 3481 | 10-6 | 1012 |
| 10-07 | 4076 | 10-07 | 4076 | 10-7 | 1488 |
| 10-08 | 8281 | 10-08 | 8281 | 10-8 | 2535 |
| 10-09 | 1817 | 10-09 | 1817 | 10-9 | 207 |
| 10-10 | 853 | 10-10 | 853 | 10-10 | 853 |
| 10-11 | 3846 | 10-11 | 3846 | 10-11 | 3846 |
| 10-12 | 8618 | 10-12 | 8618 | 10-12 | 8618 |
| 10-13 | 8271 | 10-13 | 8271 | 10-13 | 8271 |
| 10-14 | 2781 | 10-14 | 2781 | 10-14 | 2781 |
| 10-15 | 2564 | 10-15 | 2564 | 10-15 | 2564 |
| 10-16 | 9987 | 10-16 | 9987 | 10-16 | 9987 |
| 10-17 | 8041 | 10-17 | 8041 | 10-17 | 8041 |
| 10-18 | 1224 | 10-18 | 1224 | 10-18 | 1224 |
| 10-19 | 15483 | 10-19 | 15483 | 10-19 | 15483 |
| 10-20 | 9808 | 10-20 | 9808 | 10-20 | 9808 |
| 10-21 | 2750 | 10-21 | 2750 | 10-21 | 2750 |
| 10-22 | 6400 | 10-22 | 6400 | 10-22 | 6400 |
| 10-23 | 6771 | 10-23 | 6771 | 10-23 | 6771 |
| 10-24 | 12736 | 10-24 | 12736 | 10-24 | 12736 |
| 10-25 | 12926 | 10-25 | 12926 | 10-25 | 12926 |
| 10-26 | 14679 | 10-26 | 14679 | 10-26 | 14679 |
| 10-27 | 164 | 10-27 | 164 | 10-27 | 164 |
| 10-28 | 3242 | 10-28 | 3242 | 10-28 | 3242 |
| 10-29 | 8197 | 10-29 | 8197 | 10-29 | 8197 |
| 10-30 | 20819 | 10-30 | 20819 | 10-30 | 20819 |
| 10-31 | 3495 | 10-31 | 3495 | 10-31 | 3495 |
| 11-01 | 2780 | 11-01 | 2780 | 11-1 | 154 |
| 11-02 | 12721 | 11-02 | 12721 | 11-2 | 710 |
| 11-03 | 3494 | 11-03 | 3494 | 11-3 | 363 |
| 11-04 | 23842 | 11-04 | 23842 | 11-4 | 2725 |
| 11-05 | 175 | 11-05 | 175 | 11-5 | 922 |
| 11-06 | 13461 | 11-06 | 13461 | 11-6 | 396 |
| 11-07 | 21819 | 11-07 | 21819 | 11-7 | 95 |
| 11-08 | 7450 | 11-08 | 7450 | 11-8 | 446 |
| 11-09 | 3254 | 11-09 | 3254 | 11-9 | 495 |
| 11-10 | 22898 | 11-10 | 22898 | 11-10 | 22898 |
| 11-11 | 12701 | 11-11 | 12701 | 11-11 | 12701 |
| 11-12 | 12702 | 11-12 | 12702 | 11-12 | 12702 |
| 11-13 | 3504 | 11-13 | 3504 | 11-13 | 3504 |
| 11-14 | 23209 | 11-14 | 23209 | 11-14 | 23209 |
| 11-15 | 27054 | 11-15 | 27054 | 11-15 | 27054 |
| 11-16 | 3993 | 11-16 | 3993 | 11-16 | 3993 |
| 11-17 | 154 | 11-17 | 154 | 11-17 | 154 |
| 11-18 | 14376 | 11-18 | 14376 | 11-18 | 14376 |
| 11-19 | 984 | 11-19 | 984 | 11-19 | 984 |
| 11-20 | 3823 | 11-20 | 3823 | 11-20 | 3823 |
| 11-21 | 710 | 11-21 | 710 | 11-21 | 710 |
| 11-22 | 12018 | 11-22 | 12018 | 11-22 | 12018 |
| 11-23 | 6548 | 11-23 | 6548 | 11-23 | 6548 |
| 11-24 | 25705 | 11-24 | 25705 | 11-24 | 25705 |
| 11-25 | 1350 | 11-25 | 1350 | 11-25 | 1350 |
| 11-26 | 12703 | 11-26 | 12703 | 11-26 | 12703 |
| 11-27 | 4252 | 11-27 | 4252 | 11-27 | 4252 |
| 11-28 | 14719 | 11-28 | 14719 | 11-28 | 14719 |
| 11-29 | 4450 | 11-29 | 4450 | 11-29 | 4450 |
| 11-30 | 9107 | 11-30 | 9107 | 11-30 | 9107 |
| 12-01 | 244 | 12-01 | 244 | 12-1 | 711 |
| 12-02 | 19942 | 12-02 | 19942 | 12-2 | 484 |
| 12-03 | 60873 | 12-03 | 60873 | 12-3 | 1925 |
| 12-04 | 11885 | 12-04 | 11885 | 12-4 | 1081 |
| 12-05 | 3329 | 12-05 | 3329 | 12-5 | 1351 |
| 12-06 | 3259 | 12-06 | 3259 | 12-6 | 2014 |
| 12-07 | 7511 | 12-07 | 7511 | 12-7 | 298 |
| 12-08 | 3714 | 12-08 | 3714 | 12-8 | 149 |
| 12-09 | 4729 | 12-09 | 4729 | 12-9 | 500 |
| 12-10 | 3456 | 12-10 | 3456 | 12-10 | 3456 |
| 12-11 | 50289 | 12-11 | 50289 | 12-11 | 50289 |
| 12-12 | 711 | 12-12 | 711 | 12-12 | 711 |
| 12-13 | 47502 | 12-13 | 47502 | 12-13 | 47502 |
| 12-14 | 4560 | 12-14 | 4560 | 12-14 | 4560 |
| 12-15 | 11942 | 12-15 | 11942 | 12-15 | 11942 |
| 12-16 | 6986 | 12-16 | 6986 | 12-16 | 6986 |
| 12-17 | 11078 | 12-17 | 11078 | 12-17 | 11078 |
| 12-18 | 9167 | 12-18 | 9167 | 12-18 | 9167 |
| 12-19 | 1426 | 12-19 | 1426 | 12-19 | 1426 |
| 12-20 | 36498 | 12-20 | 36498 | 12-20 | 36498 |
| 12-21 | 8732 | 12-21 | 8732 | 12-21 | 8732 |
| 12-22 | 17882 | 12-22 | 17882 | 12-22 | 17882 |
| 12-23 | 9550 | 12-23 | 9550 | 12-23 | 9550 |
| 12-24 | 661 | 12-24 | 661 | 12-24 | 661 |
| 12-25 | 9417 | 12-25 | 9417 | 12-25 | 9417 |
| 12-26 | 964 | 12-26 | 964 | 12-26 | 964 |
| 12-27 | 484 | 12-27 | 484 | 12-27 | 484 |
| 12-28 | 5183 | 12-28 | 5183 | 12-28 | 5183 |
| 12-29 | 30418 | 12-29 | 30418 | 12-29 | 30418 |
| 12-30 | 7146 | 12-30 | 7146 | 12-30 | 7146 |
| 12-31 | 9451 | 12-31 | 9451 | 12-31 | 9451 |

R CODE

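```r
library(ggplot2)
library(readr)
library(stringr)
library(tidyr)

# pi.txt holds the digits of pi as one string, "314159...", so position 1 is the 3.
# Keep digits only, in case the file contains a decimal point or line breaks.
pistring = str_remove_all(read_file("pi.txt"), "[^0-9]")

# Choose 2016 because it's a leap year, so Feb 29 is included (366 days)
dayvec = seq(as.Date("2016-01-01"), as.Date("2016-12-31"), by = 1)
dayvec = format(dayvec, "%m-%d")                            # "07-04"
shorterdayvec = sub("^0(.-.)", "\\1", dayvec)               # "7-04": drop the month's leading zero
shortestdayvec = sub("(.-)0(.)$", "\\1\\2", shorterdayvec)  # "7-4": also drop the day's leading zero

# Start position of the first occurrence of each digit string (dashes removed),
# or NA if it never appears. fixed() matches the digits literally, not as a regex.
df = data.frame(
  birthday = dayvec,
  loc = str_locate(pistring, fixed(gsub("-", "", dayvec)))[, 1],
  shorter_bday = shorterdayvec,
  shorter_loc = str_locate(pistring, fixed(gsub("-", "", shorterdayvec)))[, 1],
  shortest_bday = shortestdayvec,
  shortest_loc = str_locate(pistring, fixed(gsub("-", "", shortestdayvec)))[, 1]
)

write.csv(df, file = "birthdays_in_pi.csv", row.names = FALSE)
```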
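The two `sub()` calls in the script are easy to misread, so here is a quick standalone check of what they do to a few dates. It rebuilds the same 366 date strings with base R only. The `^` anchor on the first pattern is equivalent to the unanchored version here, because the dash is always the third character. The `examples` vector is just an illustrative choice.

```r
# Every MM-DD in leap year 2016, as in the script
dayvec <- format(seq(as.Date("2016-01-01"), as.Date("2016-12-31"), by = "day"), "%m-%d")

# Same two substitutions as the script
shorter  <- sub("^0(.-.)", "\\1", dayvec)        # "07-04" -> "7-04"
shortest <- sub("(.-)0(.)$", "\\1\\2", shorter)  # "7-04"  -> "7-4"

examples <- c("01-10", "07-04", "10-01", "12-25")
data.frame(full     = examples,
           shorter  = shorter[match(examples, dayvec)],
           shortest = shortest[match(examples, dayvec)])
```

The months January through September (274 dates) lose a leading zero in the shorter form. Days 01 to 09 of every month (108 dates) lose one more in the shortest form. For the remaining dates the three columns are identical, which is why so many table rows repeat the same location three times.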

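```r
# One row per (birthday, format): `key` names the format, `location` its position.
# pivot_longer() is tidyr's current replacement for the superseded gather().
long_df = df %>%
  pivot_longer(c(loc, shorter_loc, shortest_loc),
               names_to = "key", values_to = "location")

# coord_cartesian() zooms to 0-40,000 without dropping the few later matches
p = ggplot(long_df, aes(x = location, fill = key)) +
  geom_density(alpha = .3) +
  coord_cartesian(xlim = c(0, 40000)) +
  theme(legend.position = "bottom")
p

ggsave(filename = "birthdays_in_pi.pdf", plot = p, height = 4, width = 4)
```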

```r
# Now repeat with 100,000 uniformly random digits instead of pi.
# The seed is arbitrary; it only makes the run reproducible.
set.seed(2016)
randstring = paste(sample(0:9, 100000, replace = TRUE), collapse = "")

# Check our work: each digit should appear roughly 10,000 times
table(str_split(randstring, "")[[1]])

df_rand = data.frame(
  birthday = dayvec,
  loc = str_locate(randstring, fixed(gsub("-", "", dayvec)))[, 1],
  shorter_bday = shorterdayvec,
  shorter_loc = str_locate(randstring, fixed(gsub("-", "", shorterdayvec)))[, 1],
  shortest_bday = shortestdayvec,
  shortest_loc = str_locate(randstring, fixed(gsub("-", "", shortestdayvec)))[, 1]
)

# A string that never appears gets NA; geom_density() drops those rows with a warning
long_df_rand = df_rand %>%
  pivot_longer(c(loc, shorter_loc, shortest_loc),
               names_to = "key", values_to = "location")

p = ggplot(long_df_rand, aes(x = location, fill = key)) +
  geom_density(alpha = .3) +
  coord_cartesian(xlim = c(0, 40000)) +
  theme(legend.position = "bottom")
p

ggsave(filename = "birthdays_in_random.png", plot = p, height = 4, width = 4)
```
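The "check our work" step above eyeballs the digit counts. A slightly more formal version of the same check is a chi-squared goodness-of-fit test against "all ten digits equally likely." Here is a minimal base-R sketch. The seed is an arbitrary assumption, and the same counting lines work on `pistring`. Converting to a factor with levels 0 to 9 keeps all ten digits in the table and silently drops any non-digit characters.

```r
set.seed(2016)  # arbitrary seed, only for reproducibility
randstring <- paste(sample(0:9, 100000, replace = TRUE), collapse = "")

# Count each digit; non-digits (if any) become NA and are left out of the table
digits <- strsplit(randstring, "")[[1]]
counts <- table(factor(digits, levels = as.character(0:9)))
counts

# Pearson chi-squared test; the default null is equal probability for every cell
test <- chisq.test(counts)
test$p.value
```

A small p-value would suggest the digit frequencies are uneven. A test like this only checks single-digit frequencies, not the multi-digit patterns that the birthday search depends on.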