Heuristica: An R package for testing models of binary choice

CLASSIC HEURISTICS AND DATA SETS ALL IN ONE TIDY PACKAGE

It just got a lot easier to simulate the performance of simple heuristics.

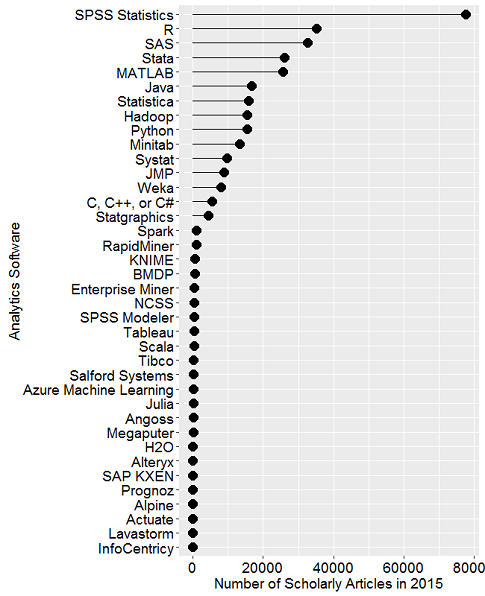

Jean Czerlinski Whitmore, a software engineer at Google with a long history in modeling cognition, and Daniel Barkoczi, a postdoctoral fellow at the Max Planck Institute for Human Development, have created heuristica: an R package to model the performance of simple heuristics. It comprises the heuristics covered in the first chapters of Simple Heuristics That Make Us Smart, such as Take The Best, the unit-weighted linear model, and more. The package also includes data, such as the original German cities data set, which has become a benchmark for testing heuristic models of choice and is cited in hundreds of papers.

A good place to start is the README, along with the vignettes:

- Comparing the performance of simple heuristics using the Heuristica R package

- How to make your own heuristic

- Reproducing Results (Take The Best vs. regression on city population)

Here’s the heuristica package’s home on CRAN and here’s a description of the package in the authors’ own words:

The heuristica R package implements heuristic decision models, such as Take The Best (TTB) and a unit-weighted linear model. The models are designed for two-alternative choice tasks, such as which of two schools has a higher drop-out rate. The package also wraps more well-known models like regression and logistic regression into the two-alternative choice framework so all these models can be assessed side-by-side. It provides functions to measure accuracy, such as an overall `percentCorrect` and, for advanced users, some confusion matrix functions. These measures can be applied in-sample or out-of-sample.

The goal is to make it easy to explore the range of conditions in which simple heuristics are better than more complex models. Optimizing is not always better!
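To make the two-alternative framework concrete, here is a minimal sketch of Take The Best, a unit-weighted model, and an overall percent-correct score. It is written in plain Python for illustration and is not the heuristica API: every function name and the five-item toy data set are hypothetical, the cues are assumed to be binary and coded so that 1 points toward the larger criterion, and guesses are scored as half correct (their expected value), which is an assumption of this sketch rather than a statement about how the package scores them.

```python
from itertools import combinations

# Hypothetical toy data: "size" is the criterion, the rest are binary cues.
rows = [
    {"name": "A", "size": 90, "capital": 1, "team": 1, "university": 1},
    {"name": "B", "size": 70, "capital": 0, "team": 1, "university": 1},
    {"name": "C", "size": 50, "capital": 0, "team": 1, "university": 0},
    {"name": "D", "size": 30, "capital": 0, "team": 0, "university": 1},
    {"name": "E", "size": 10, "capital": 0, "team": 0, "university": 0},
]
criterion = "size"
cues = ["capital", "team", "university"]


def cue_validities(rows, criterion, cues):
    """Share of discriminating pairs in which the cue points to the larger item."""
    validities = {}
    for cue in cues:
        right = wrong = 0
        for a, b in combinations(rows, 2):
            if a[cue] == b[cue] or a[criterion] == b[criterion]:
                continue  # cue does not discriminate, or there is no correct answer
            if (a[cue] > b[cue]) == (a[criterion] > b[criterion]):
                right += 1
            else:
                wrong += 1
        validities[cue] = right / (right + wrong) if right + wrong else 0.5
    return validities


def take_the_best(a, b, cues_by_validity):
    """Decide on the first discriminating cue: 1 picks a, -1 picks b, 0 is a guess."""
    for cue in cues_by_validity:
        if a[cue] != b[cue]:
            return 1 if a[cue] > b[cue] else -1
    return 0


def unit_weight(a, b, cues):
    """Add up all cues with equal weight and pick the larger sum (0 on a tie)."""
    sum_a = sum(a[cue] for cue in cues)
    sum_b = sum(b[cue] for cue in cues)
    return (sum_a > sum_b) - (sum_a < sum_b)


def percent_correct(rows, criterion, predict):
    """Score a pairwise predictor over all pairs; a guess earns half credit."""
    score = total = 0
    for a, b in combinations(rows, 2):
        if a[criterion] == b[criterion]:
            continue
        truth = 1 if a[criterion] > b[criterion] else -1
        choice = predict(a, b)
        score += 0.5 if choice == 0 else float(choice == truth)
        total += 1
    return 100 * score / total


validities = cue_validities(rows, criterion, cues)
# Stable sort: cues with equal validity keep their original order.
ordered = sorted(cues, key=lambda cue: validities[cue], reverse=True)

ttb_score = percent_correct(rows, criterion, lambda a, b: take_the_best(a, b, ordered))
unit_score = percent_correct(rows, criterion, lambda a, b: unit_weight(a, b, cues))
print("Take The Best:", ttb_score)
print("Unit weights: ", unit_score)
```

Both models are fit and scored on the same five items here, so this is an in-sample comparison. In this toy data the two models differ only on the pair C versus D, where the cue sums tie and the unit-weighted model has to guess, while Take The Best decides on its first discriminating cue. Holding out some rows before calling `cue_validities` would turn it into an out-of-sample comparison.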